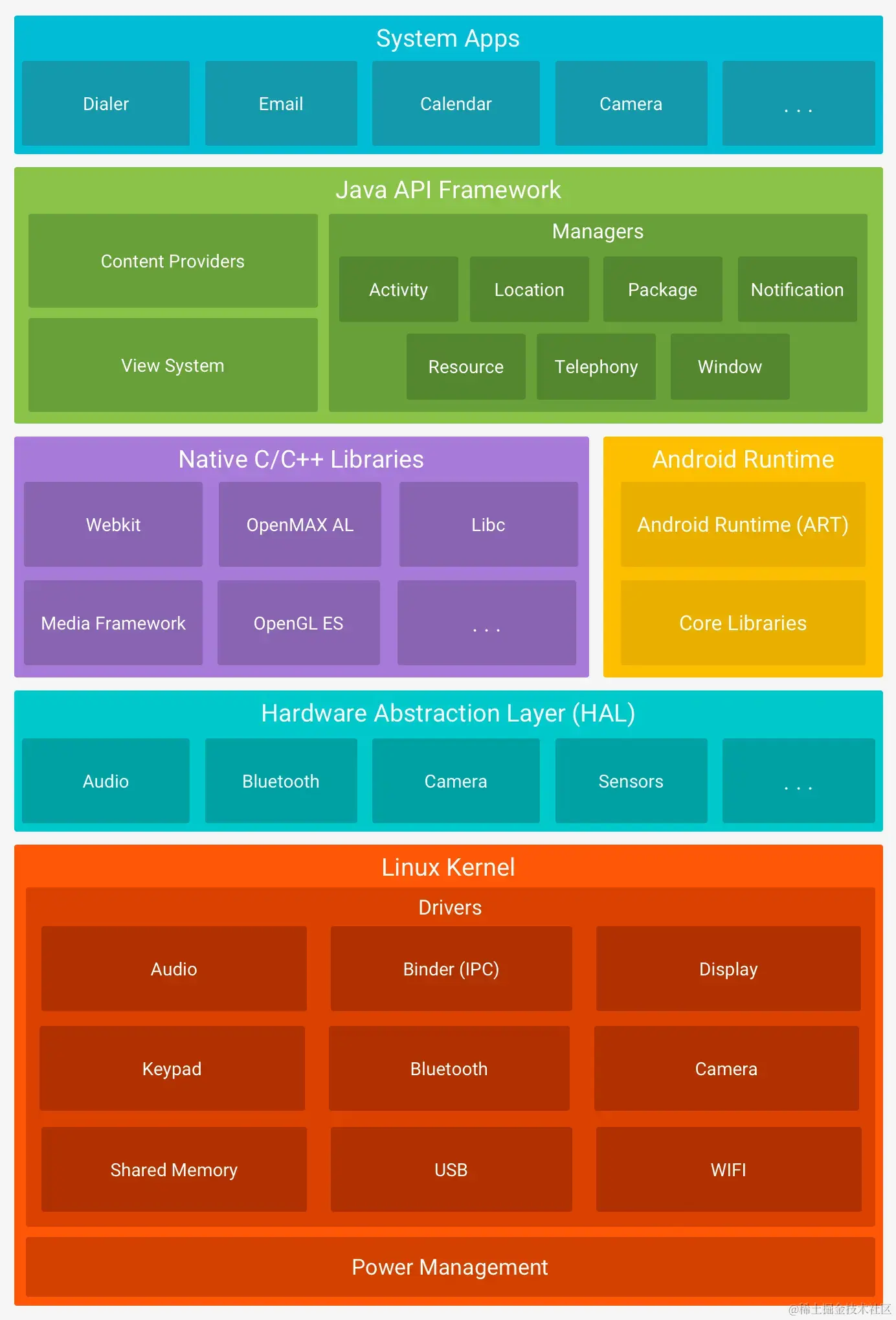

The figure above shows the overall architecture of Android. Android Runtime is to Android what the heart is to the human body: it is the environment in which Android programs are loaded and run. This article focuses on Android Runtime, tracing its development and current state, and then discusses the feasibility of using Profile-Guided Optimization (PGO) to speed up application launch.

Evolution of App Runtime

JVM

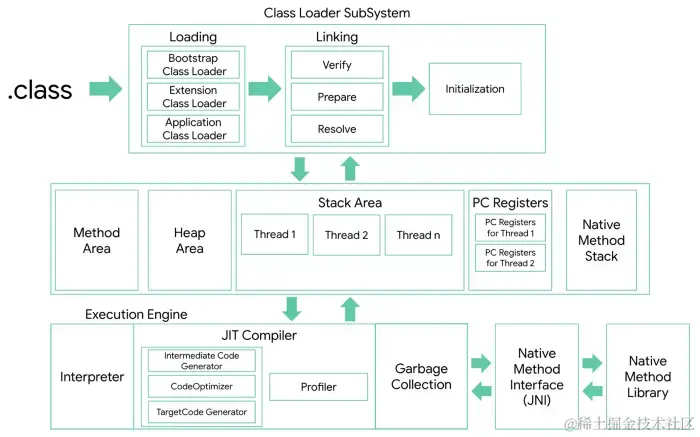

Native Android code is written in Java or Kotlin and compiled into .class files by javac or kotlinc. Outside Android, these .class files are fed to the JVM for execution. The JVM can be roughly divided into three subsystems: the Class Loader, the Runtime Data Area, and the Execution Engine. The Class Loader loads classes, verifies bytecode, resolves symbolic references, allocates memory for static variables, and initializes them. The Runtime Data Area stores data and is divided into the method area, heap, stack, program counter, and native method stack. The Execution Engine executes bytecode and performs garbage collection.

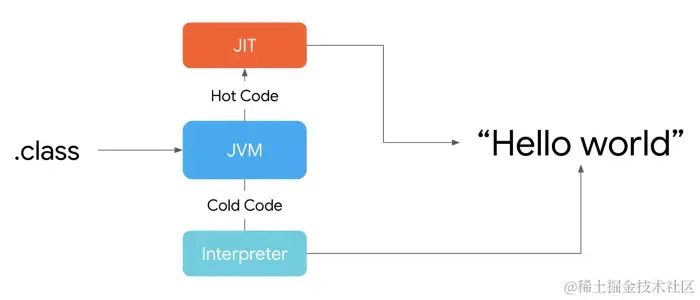

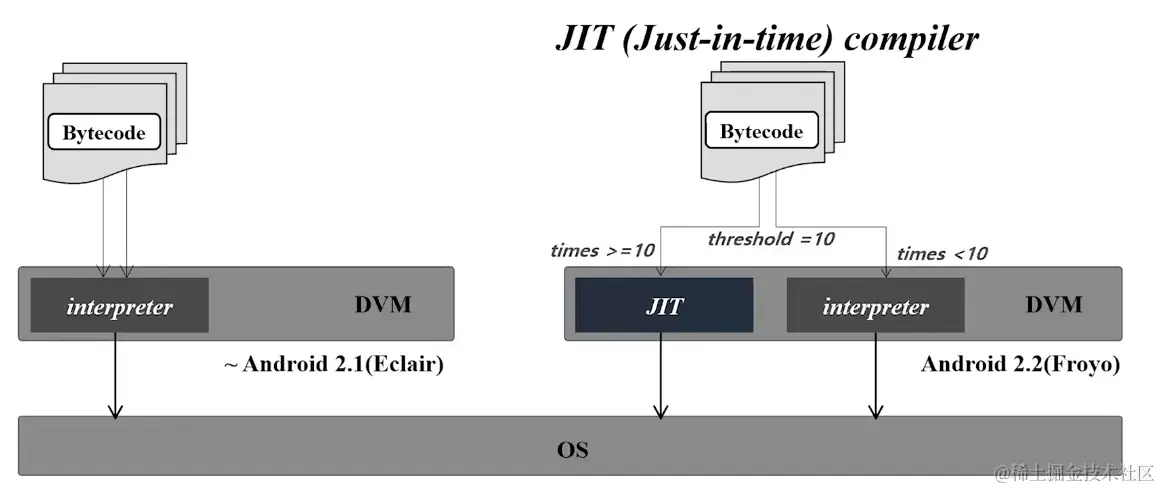

The Execution Engine runs code with an interpreter or a JIT (just-in-time) compiler. The interpreter decodes bytecode as it executes. Re-interpreting the same bytecode on every call is wasteful, so hot code is JIT-compiled into machine code, and later calls run the compiled version.
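To make the "interpret first, compile when hot" idea concrete, here is a minimal sketch in Java. It is a toy model, not how any real VM is implemented: the class `MixedModeSketch`, the threshold value, and the use of a lambda to stand in for machine code are all assumptions made for illustration.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.IntUnaryOperator;

/** Toy model of mixed-mode execution: interpret first, "compile" once a method is hot. */
final class MixedModeSketch {
    // Hypothetical threshold; real VMs tune this and use richer heuristics.
    static final int HOT_THRESHOLD = 3;

    private final Map<String, Integer> callCounts = new HashMap<>();
    private final Map<String, IntUnaryOperator> codeCache = new HashMap<>();
    int interpretedCalls = 0;
    int compiledCalls = 0;

    int invoke(String method, IntUnaryOperator body, int arg) {
        IntUnaryOperator compiled = codeCache.get(method);
        if (compiled != null) {
            compiledCalls++;                 // fast path: reuse cached code
            return compiled.applyAsInt(arg);
        }
        interpretedCalls++;                  // slow path: "interpret" the body
        int count = callCounts.merge(method, 1, Integer::sum);
        if (count >= HOT_THRESHOLD) {
            codeCache.put(method, body);     // stands in for emitting machine code
        }
        return body.applyAsInt(arg);
    }

    public static void main(String[] args) {
        MixedModeSketch vm = new MixedModeSketch();
        for (int i = 0; i < 5; i++) {
            vm.invoke("square", x -> x * x, i);
        }
        // prints "interpreted=3, compiled=2"
        System.out.println("interpreted=" + vm.interpretedCalls + ", compiled=" + vm.compiledCalls);
    }
}
```

The first three calls take the slow path; once the counter reaches the threshold, the remaining calls are served from the code cache.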

DVM (Android 2.1/2.2)

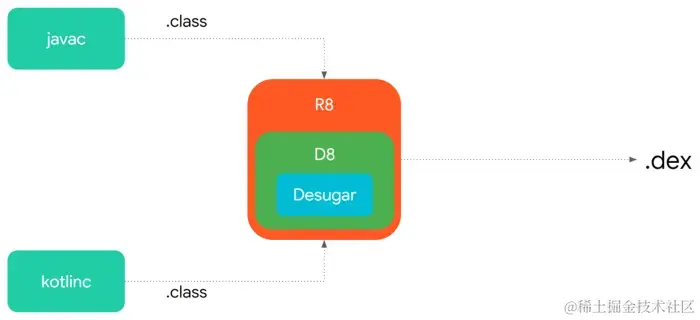

The JVM is a stack-based runtime. Mobile devices are more constrained in performance and storage, so Android uses the register-based Dalvik VM (DVM) instead. Moving from the JVM to the DVM requires converting .class files into .dex files. Originally this conversion took three steps: desugar -> proguard -> dex compiler. These were later reduced to two, proguard -> D8 (with desugaring built in), and today a single step remains: R8 (which includes D8 and desugaring).
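The difference between the two designs can be seen by evaluating `c = a + b` both ways. The sketch below is only an illustration: the class `StackVsRegisterSketch` and its methods are hypothetical, and the mnemonics in the comments (`iload`, `iadd`, `istore` for the JVM, `add-int` for Dalvik) merely label what each simulated step corresponds to.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Toy comparison of stack-based and register-based evaluation of c = a + b. */
final class StackVsRegisterSketch {
    /** Stack machine: operands are pushed, the operator pops them. Returns {result, instructions}. */
    static int[] runStack(int a, int b) {
        int[] locals = {a, b, 0};
        Deque<Integer> stack = new ArrayDeque<>();
        int executed = 0;
        stack.push(locals[0]); executed++;                  // iload a
        stack.push(locals[1]); executed++;                  // iload b
        stack.push(stack.pop() + stack.pop()); executed++;  // iadd
        locals[2] = stack.pop(); executed++;                // istore c
        return new int[] {locals[2], executed};
    }

    /** Register machine: one instruction names both sources and the destination. */
    static int[] runRegister(int a, int b) {
        int[] v = {a, b, 0};
        int executed = 0;
        v[2] = v[0] + v[1]; executed++;                     // add-int v2, v0, v1
        return new int[] {v[2], executed};
    }

    public static void main(String[] args) {
        int[] s = runStack(2, 3);
        int[] r = runRegister(2, 3);
        System.out.println("stack: result=" + s[0] + ", instructions=" + s[1]);
        System.out.println("register: result=" + r[0] + ", instructions=" + r[1]);
    }
}
```

Both versions compute the same value, but the register form needs one instruction where the stack form needs four, which is part of why a register-based format suits constrained devices.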

Here we focus on how the execution engine of the Android runtime differs from that of the JVM. Early versions of Android had only an interpreter; JIT was introduced in Android 2.2. At that point, the execution engines of Dalvik and the JVM did not differ much.

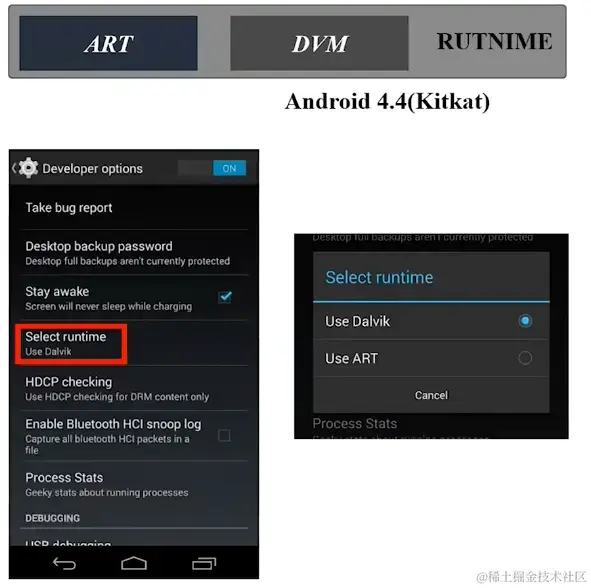

ART-AOT (Android 4.4/5.0)

To speed up application launch and improve the user experience, Android 4.4 introduced a new runtime, ART (Android Runtime), as an option alongside Dalvik. In Android 5.0, ART replaced Dalvik as the only runtime.

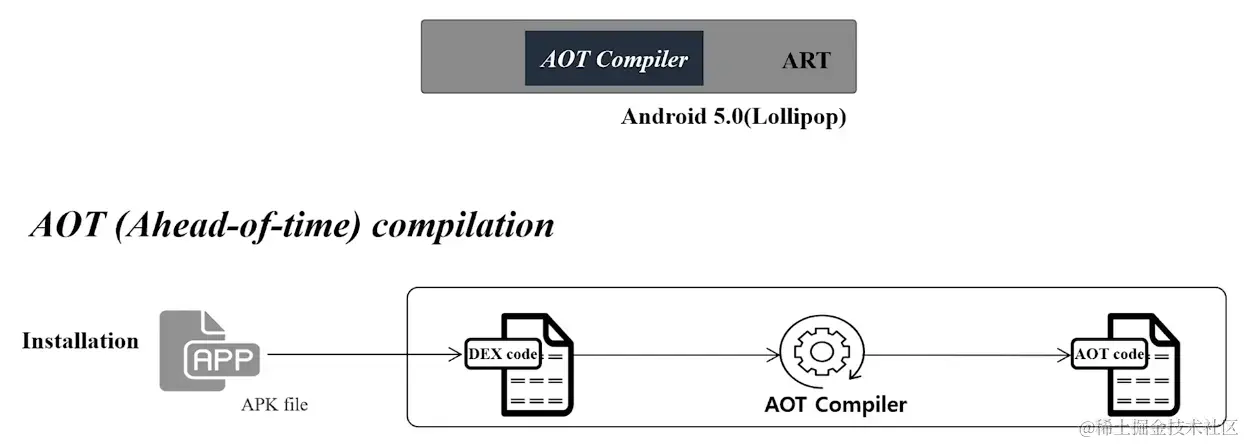

ART uses AOT (ahead-of-time) compilation: when an app is installed, its .dex is precompiled into machine code, producing an .oat file. This greatly shortens launch time because no interpretation or JIT compilation is needed when the app starts and runs.

However, AOT brought the following two problems:

- Application install time increased significantly. Compiling everything to machine code at install time takes a while, and a system upgrade has to recompile every app, which produced the notoriously long wait after an upgrade.

- Apps occupy much more storage space. Because every app is fully compiled into .oat machine code, its footprint grows considerably, which strains already limited storage.

On reflection, compiling everything may be unnecessary: users often use only a subset of an app's features, and loading the larger, fully compiled machine code consumes extra I/O.

ART-PGO (Android 7.0)

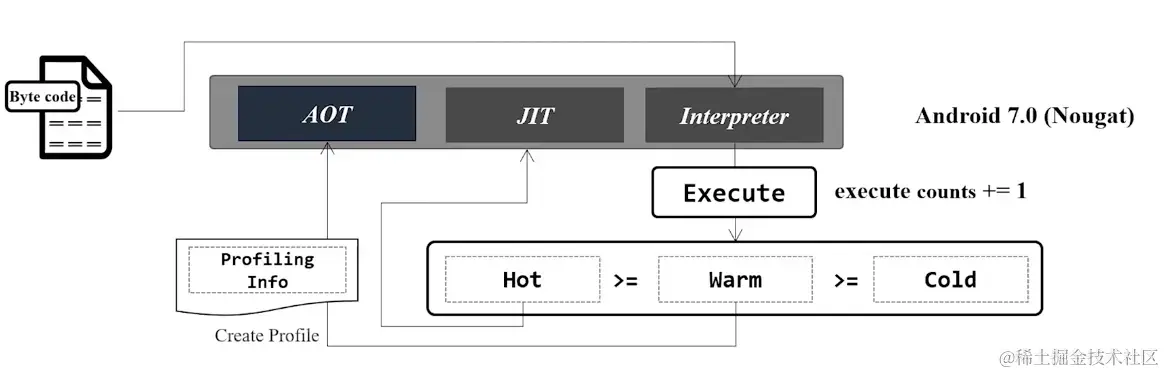

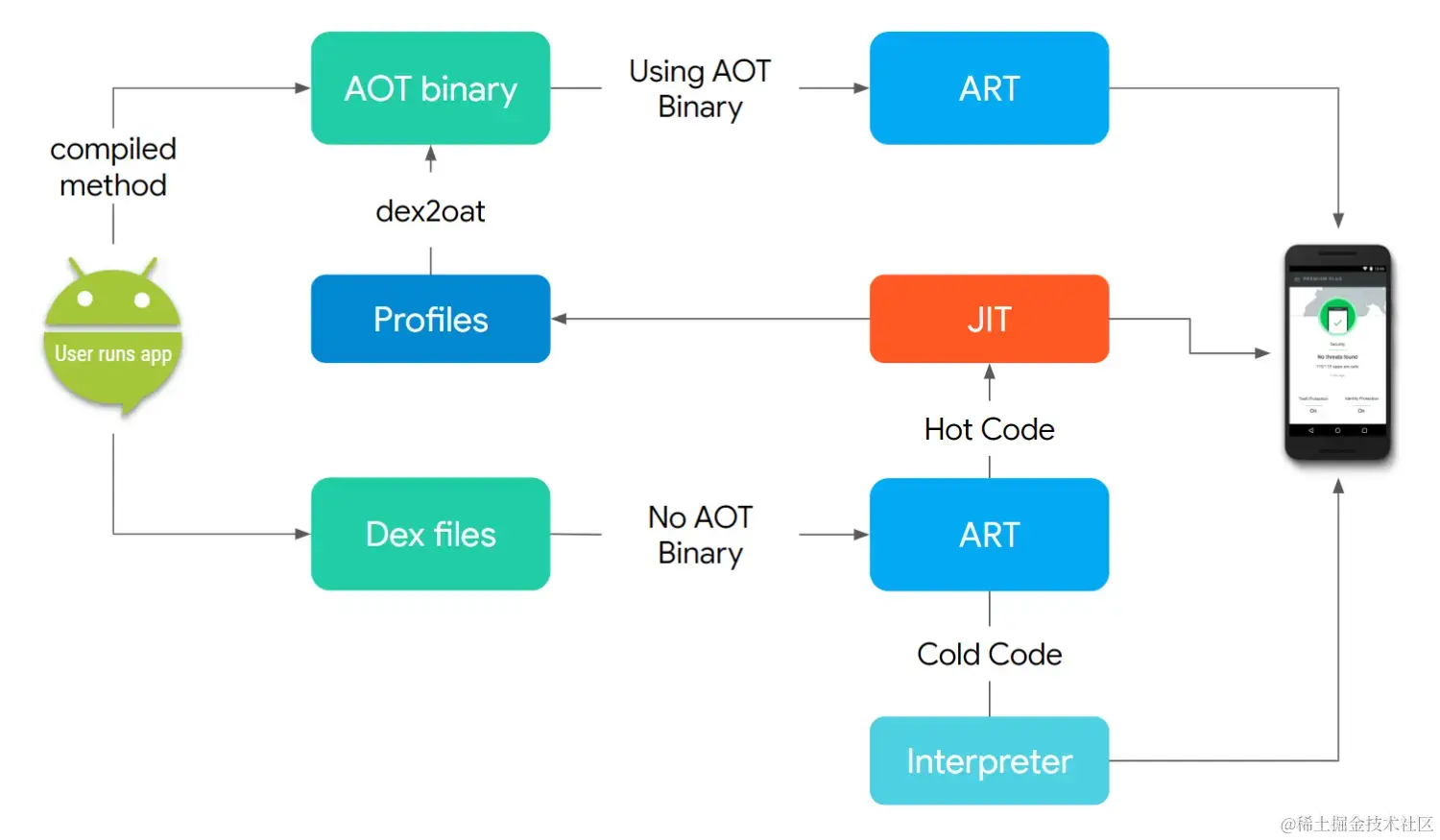

Starting with Android 7.0, Google reintroduced JIT compilation and stopped compiling the entire application at install time. Combining the strengths of AOT, JIT, and the interpreter, it adopted PGO (profile-guided optimization).

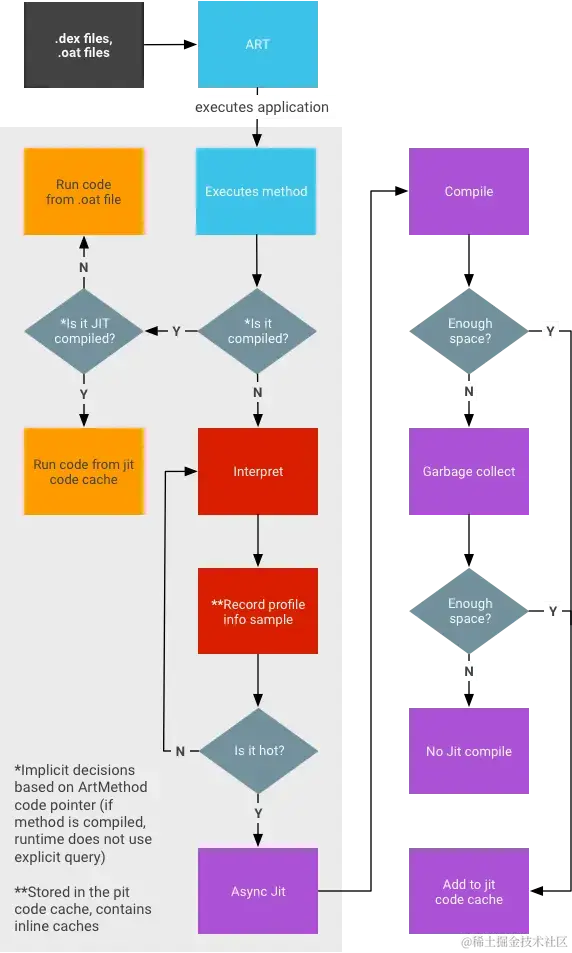

While the app runs, code is first executed by the interpreter. Methods that are called frequently are JIT-compiled and the compiled code is cached; these hot methods are also recorded in a Profile file.

When the device is idle (screen off and charging), the compilation daemon AOT-compiles the code listed in the Profile file.

When the app is reopened, code that has undergone JIT and AOT compilation can execute directly.

This strikes a balance between install speed and launch speed.
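The cycle described above (record hot methods while running, compile only those while idle, run them directly on the next launch) can be sketched as follows. This is a simplified model under stated assumptions: the class `ProfileGuidedSketch`, its method names, and the example method strings are hypothetical and do not correspond to ART internals.

```java
import java.util.Set;
import java.util.TreeSet;

/** Toy model of profile-guided compilation: only methods listed in the profile get AOT-compiled. */
final class ProfileGuidedSketch {
    private final Set<String> profile = new TreeSet<>();      // hot methods recorded at run time
    private final Set<String> aotCompiled = new TreeSet<>();  // methods compiled while idle

    /** Called while the app runs, when a method is found to be hot. */
    void recordHotMethod(String method) {
        profile.add(method);
    }

    /** Stands in for the idle-time compilation daemon. */
    void compileWhileIdle() {
        aotCompiled.addAll(profile);
    }

    /** How a method would start executing on the next launch. */
    String executionMode(String method) {
        return aotCompiled.contains(method) ? "aot" : "interpreter";
    }

    public static void main(String[] args) {
        ProfileGuidedSketch app = new ProfileGuidedSketch();
        app.recordHotMethod("MainActivity.onCreate"); // hypothetical hot method
        app.compileWhileIdle();
        System.out.println(app.executionMode("MainActivity.onCreate")); // prints "aot"
        System.out.println(app.executionMode("SettingsActivity.open")); // prints "interpreter"
    }
}
```

Rarely used code never enters the profile, so it costs neither compile time nor storage, which is the trade-off that full AOT compilation could not offer.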

JIT Workflow:

ART-Cloud Profile (Android 9.0)

However, a problem remains: when a user installs an app for the first time, nothing has been AOT-compiled yet. It usually takes several sessions before launch speed improves.

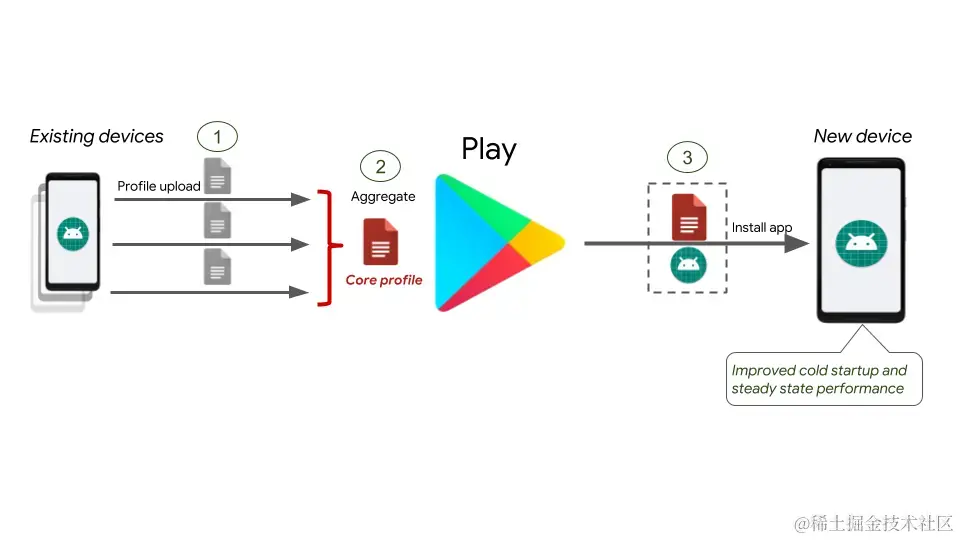

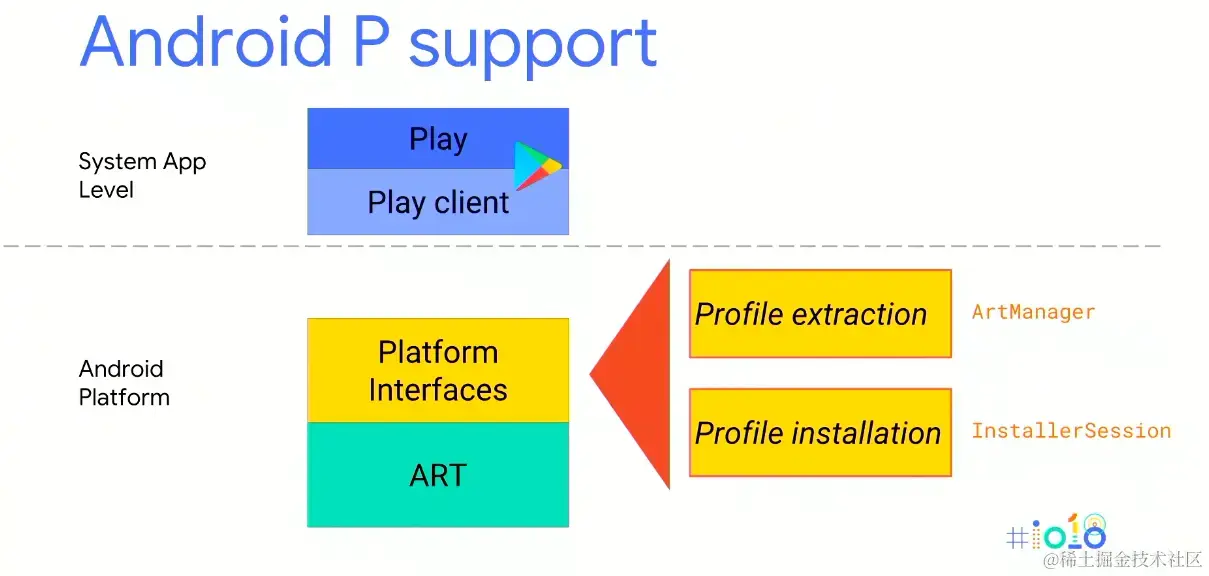

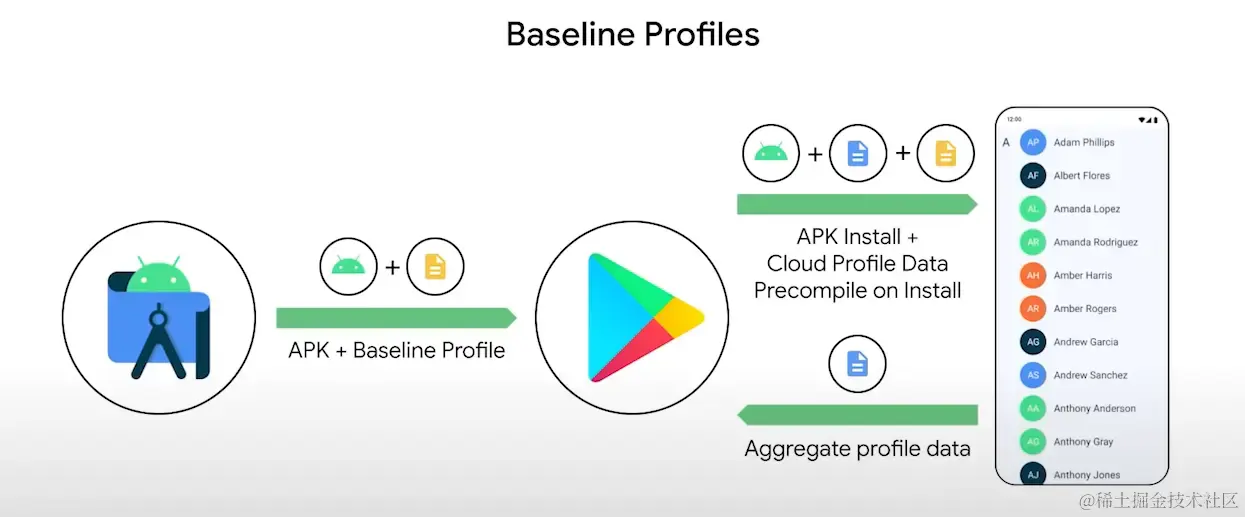

An app typically has code that most users execute frequently (for example, startup and commonly used features), and in most cases earlier versions exist from which Profile data can be collected. Google therefore uploads user-generated Profile files to Google Play and attaches a Profile file to the app at install time, so that part of the code can be AOT-compiled right away. This gives faster launch on the first open after installation.
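One way to picture the aggregation step is to keep only the methods that appear in a large enough share of uploaded profiles. The sketch below is an assumption about the general idea, not a description of what Google Play actually does: the class `CloudProfileSketch`, the `minShare` parameter, and the sample method names are all hypothetical.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

/** Toy aggregation of per-user profiles into one shared "core" profile. */
final class CloudProfileSketch {
    /** Keeps methods that appear in at least minShare (0..1) of the user profiles. */
    static Set<String> aggregate(List<Set<String>> userProfiles, double minShare) {
        Map<String, Integer> counts = new TreeMap<>();
        for (Set<String> userProfile : userProfiles) {
            for (String method : userProfile) {
                counts.merge(method, 1, Integer::sum);
            }
        }
        Set<String> core = new TreeSet<>();
        for (Map.Entry<String, Integer> entry : counts.entrySet()) {
            if (entry.getValue() >= minShare * userProfiles.size()) {
                core.add(entry.getKey());
            }
        }
        return core;
    }

    public static void main(String[] args) {
        List<Set<String>> profiles = Arrays.asList(
                new HashSet<>(Arrays.asList("startup", "search")),
                new HashSet<>(Arrays.asList("startup", "settings")),
                new HashSet<>(Arrays.asList("startup", "search")));
        // "settings" appears in only one of three profiles and is dropped.
        System.out.println(aggregate(profiles, 0.6)); // prints "[search, startup]"
    }
}
```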

Cloud Profile requires app store support. Google Play has a very low market share in China, so Cloud Profile optimization is almost useless here. The Profile mechanism nevertheless suggested an approach to startup optimization, with two problems that long troubled us: how to generate the Profile file, and how to use it once generated.

The first problem is relatively straightforward: running the app a few times produces the Profile file, which can then be collected manually from the file system, although collecting it in a more automated way remained unsolved. For the second problem, we know where the Profile file ends up, so in principle we could place a generated file in the corresponding system directory. On most phones, however, apps do not have permission to write that file directly. As a result, we could not put Profile-based optimization into practice until Baseline Profile came along.

Baseline Profile

Baseline Profile is a set of tools for generating and using Profile files. It first appeared in January 2022 and drew wide attention at Google I/O 2022, alongside updates to Jetpack. One motivation was that Google Maps increased its release frequency, so new versions shipped before a Cloud Profile had been fully collected, leaving Cloud Profile ineffective. Another was that Jetpack Compose is a library bundled with the app rather than part of the system, so it is not precompiled into machine code; because the library is large, apps that use it launch slowly until a Profile has been generated. To solve these problems, Google created the BaselineProfileRule in the Macrobenchmark library to collect profiles and the ProfileInstaller library to apply them.

The Baseline Profile mechanism can speed up app launch on devices running Android 7 and later, because, as described above, Android 7 already supports PGO (profile-guided optimization). The generated Profile file is packaged into the APK and guides AOT compilation, together with the Google Play Cloud Profile where one is available. Although Cloud Profile is essentially unusable in China, Baseline Profile works independently of Google Play.
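Before it is compiled into the APK, a baseline profile is kept in a human-readable text form (typically a `baseline-prof.txt` file) in which each method rule consists of flags (`H` for hot, `S` for startup, `P` for post-startup), a class descriptor, `->`, and a method signature. The sketch below parses one such rule to show its structure. The class `BaselineRuleSketch` is hypothetical, the sample rule uses a made-up `com/example/MainActivity` class, and wildcard rules are not handled.

```java
/** Sketch of parsing one line of a human-readable baseline profile rule. */
final class BaselineRuleSketch {
    final String flags;            // any of H, S, P; empty for class-only rules
    final String classDescriptor;  // e.g. Lcom/example/MainActivity;
    final String methodSignature;  // empty for class-only rules

    private BaselineRuleSketch(String flags, String classDescriptor, String methodSignature) {
        this.flags = flags;
        this.classDescriptor = classDescriptor;
        this.methodSignature = methodSignature;
    }

    static BaselineRuleSketch parse(String line) {
        String rule = line.trim();
        // Flags contain only H, S, P, so the first 'L' starts the class descriptor.
        int classStart = rule.indexOf('L');
        if (classStart < 0) {
            throw new IllegalArgumentException("no class descriptor: " + line);
        }
        String flags = rule.substring(0, classStart);
        int arrow = rule.indexOf("->", classStart);
        if (arrow < 0) {
            return new BaselineRuleSketch(flags, rule.substring(classStart), "");
        }
        return new BaselineRuleSketch(
                flags, rule.substring(classStart, arrow), rule.substring(arrow + 2));
    }

    boolean isStartup() {
        return flags.indexOf('S') >= 0;
    }

    public static void main(String[] args) {
        BaselineRuleSketch r =
                parse("HSPLcom/example/MainActivity;->onCreate(Landroid/os/Bundle;)V");
        System.out.println(r.flags + " | " + r.classDescriptor + " | " + r.methodSignature);
    }
}
```

Rules flagged `S` mark code used during startup, which is the part that matters most for the cold start time discussed here.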

After adopting Baseline Profile, Youdao Dictionary saw a 15% improvement in cold start time according to production statistics.

This article has covered the evolution of Android Runtime and its impact on app launch. The next article will cover Profile and dex file optimization, the principles behind the Baseline Profile library, and practical issues in detail. Stay tuned!
